For 18 minutes in April, China’s state-controlled telecommunications company hijacked 15 percent of the world’s Internet traffic, including data from the U.S. military, civilian organizations and U.S. allies.

This massive redirection of data has received scant attention in the mainstream media because the mechanics of how the hijacking was carried out and the implications of the incident are difficult for those outside the cybersecurity community to grasp, said a top security expert at McAfee, the world’s largest dedicated Internet security company.

In short, the Chinese could have eavesdropped on unprotected communications (including emails and instant messaging), manipulated data passing through their country or decrypted messages, Dmitri Alperovitch, vice president of threat research at McAfee, said.

Nobody outside of China can say, at least publicly, what happened to the terabytes of data after the traffic entered China.

The incident may receive more attention when the U.S.-China Economic and Security Review Commission, a congressional committee, releases its annual report on the bilateral relationship Nov. 17. A commission press release said the 2010 report will address “the increasingly sophisticated nature of malicious computer activity associated with China.”

Said Alperovitch: “This is one of the biggest — if not the biggest hijacks — we have ever seen.” And it could happen again, anywhere and anytime. It’s just the way the Internet works, he explained. “What happened to the traffic while it was in China? No one knows.”

The telephone giants of the world work on a system based on trust, he explained. Machine-to-machine interfaces send out messages to the Internet informing other service providers that they are the fastest and most efficient way for data packets to travel. For 18 minutes April 8, China Telecom Corp. told many ISPs of the world that its routes were the best paths to send traffic.
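The trust problem described above can be illustrated with a simplified sketch of how a router chooses among advertised routes. This is not a real BGP implementation and says nothing about how China Telecom's announcements were actually formed; the prefixes, AS numbers and the `best_route` helper are hypothetical. The only rule modeled is that the most specific matching prefix wins, with a shorter AS path as the tie-breaker.

```python
import ipaddress

# Hypothetical route advertisements: (prefix, AS path, label).
# Prefixes and AS numbers are from reserved/private ranges, for illustration only.
ROUTES = [
    ("192.0.2.0/24", [64500, 64501], "legitimate origin"),
    ("192.0.2.0/25", [64666], "unverified announcer"),
]

def best_route(destination, routes):
    """Pick the route a trusting router would use for `destination`.

    Simplified rule: longest matching prefix wins; a shorter AS path
    breaks ties. Nothing here verifies who actually owns the prefix.
    """
    addr = ipaddress.ip_address(destination)
    candidates = []
    for prefix, as_path, label in routes:
        network = ipaddress.ip_network(prefix)
        if addr in network:
            # Larger prefix length and shorter path both sort higher.
            candidates.append((network.prefixlen, -len(as_path), label))
    if not candidates:
        return None
    return max(candidates)[2]
```

In this toy table, traffic for `192.0.2.10` follows the unverified announcer simply because its advertisement is more specific, while `192.0.2.200` still reaches the legitimate origin.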

For example, a person sending information from Arlington, Va., to the White House in Washington, D.C. — only a few miles away — could have had his data routed through China. Since traffic moves around the world in milliseconds, the computer user would not have noticed the delay.

This happens accidentally a few times per year, Alperovitch said. What set this incident apart from other such mishaps was the fact that China Telecom could manage to absorb this large amount of data and send it back out again without anyone noticing a disruption in service. In previous incidents, the data would have reached a dead end, and users would not have been able to connect.

Also, the list of hijacked data just happened to include preselected destinations around the world that encompassed military, intelligence and many civilian networks in the United States and in allied countries such as Japan and Australia, he said. “Why would you keep that list?” Alperovitch asked.

The incident involved 15 percent of Internet traffic, he stressed. The amount of data included in all these packets is difficult to calculate. The data could have been stored so it could be examined later, he added. “Imagine the capability and capacity that is built into their networks. I’m not sure there was anyone else in the world who could have taken on that much traffic without breaking a sweat,” Alperovitch said.

McAfee has briefed U.S. government officials on the incident, but they were not alarmed. They said their Internet communications are encrypted. However, encryption also works on a basis of trust, McAfee experts pointed out. And that trust can be exploited.

Internet encryption depends on two keys. One key is private and not shared, and the other is public, and is embedded in most computer operating systems. Unknown to most computer users, Microsoft, Apple and other software makers embed the public certificates in their operating systems. They also trust that this system won’t be abused.

Among the certificates is one from the China Internet Information Center, an arm of China’s Ministry of Information and Industry.

“If China telecom intercepts that [encrypted message] and they are sitting on the middle of that, they can send you their public key with their public certificate and you will not know any better,” he said. The holder of this certificate has the capability to decrypt encrypted communication links, whether it’s web traffic, emails or instant messaging, Alperovitch said. “It is a flaw in the way the Internet operates,” said Yoris Evers, director of worldwide public relations at McAfee.
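A toy model may make the trust assumption concrete. The sketch below is not real TLS and performs no cryptography; `TRUSTED_ROOTS`, `Certificate` and `client_accepts` are hypothetical names. It shows only that a client accepts a certificate for a site as long as some root in its built-in list vouches for it.

```python
from dataclasses import dataclass

# Hypothetical root list, standing in for the certificates that ship
# with an operating system or browser.
TRUSTED_ROOTS = {"Root CA A", "Root CA B"}

@dataclass(frozen=True)
class Certificate:
    subject: str  # site the certificate claims to represent
    issuer: str   # authority that vouched for it

def client_accepts(cert, hostname, trusted_roots=TRUSTED_ROOTS):
    """Toy check: the name matches and the issuer is any trusted root.

    Real clients also verify signatures, validity dates and revocation;
    none of that is modeled here.
    """
    return cert.subject == hostname and cert.issuer in trusted_roots

genuine = Certificate("mail.example.com", "Root CA A")
substituted = Certificate("mail.example.com", "Root CA B")
untrusted = Certificate("mail.example.com", "Unknown CA")
```

Both the genuine and the substituted certificate pass, because the check asks only whether a trusted root issued the certificate, not which one.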

No one outside of China can say whether any of these potentially nefarious events occurred, Alperovitch noted. “It did not make mainstream news because it is so esoteric and hard to understand,” he added. It is not defined as a cyberattack because no sites were hacked or shut down. “But it is pretty disconcerting.”

And the hijacking took advantage of the way the Internet operates. “It can happen again. They can do it tomorrow or they can do it in an hour. And the same problem will occur again.”

Thursday, November 18, 2010

Cyber Experts Have Proof That China Has Hijacked U.S.-Based Internet Traffic

From the National Defense Magazine ...

Monday, November 15, 2010

The Plan To Quarantine Infected Computers

From Bruce Schneier's column at Forbes Magazine ...

Last month Scott Charney of Microsoft proposed that infected computers be quarantined from the Internet. Using a public health model for Internet security, the idea is that infected computers spreading worms and viruses are a risk to the greater community and thus need to be isolated. Internet service providers would administer the quarantine, and would also clean up and update users' computers so they could rejoin the greater Internet.

This isn't a new idea. Already there are products that test computers trying to join private networks, and only allow them access if their security patches are up-to-date and their antivirus software certifies them as clean. Computers denied access are sometimes shunted to a limited-capability sub-network where all they can do is download and install the updates they need to regain access. This sort of system has been used with great success at universities and end-user-device-friendly corporate networks. They're happy to let you log in with any device you want--this is the consumerization trend in action--as long as your security is up to snuff.
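The admission check these products perform can be sketched in a few lines. This is a minimal illustration, not any vendor's logic; `DeviceReport`, `assign_network` and the segment names are assumptions made up for the example.

```python
from dataclasses import dataclass

@dataclass
class DeviceReport:
    patches_current: bool   # security patches are up to date
    antivirus_clean: bool   # antivirus software reports no infection

def assign_network(report):
    """Return the network segment a connecting device is placed on."""
    if report.patches_current and report.antivirus_clean:
        return "full-access"
    # Limited sub-network: the device can only download the updates
    # it needs before trying again.
    return "remediation"
```

A device that fails either test lands on the remediation segment, which matches the behavior described above: it is not banned, just confined until it is fixed.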

Charney's idea is to do that on a larger scale. To implement it we have to deal with two problems. There's the technical problem--making the quarantine work in the face of malware designed to evade it, and the social problem--ensuring that people don't have their computers unduly quarantined. Understanding the problems requires us to understand quarantines in general.

Quarantines have been used to contain disease for millennia. In general several things need to be true for them to work. One, the thing being quarantined needs to be easily recognized. It's easier to quarantine a disease if it has obvious physical characteristics: fever, boils, etc. If there aren't any obvious physical effects, or if those effects don't show up while the disease is contagious, a quarantine is much less effective.

Similarly, it's easier to quarantine an infected computer if that infection is detectable. As Charney points out, his plan is only effective against worms and viruses that our security products recognize, not against those that are new and still undetectable.

Two, the separation has to be effective. The leper colonies on Molokai and Spinalonga both worked because it was hard for the quarantined to leave. Quarantined medieval cities worked less well because it was too easy to leave, or--when the diseases spread via rats or mosquitoes--because the quarantine was targeted at the wrong thing.

Computer quarantines have been generally effective because the users whose computers are being quarantined aren't sophisticated enough to break out of the quarantine, and find it easier to update their software and rejoin the network legitimately.

Three, only a small fraction of the population should need to be quarantined. The solution works only if it's a minority of the population that's affected, either with physical diseases or computer diseases. If most people are infected, overall infection rates aren't going to be slowed much by quarantining. Similarly, a quarantine that tries to isolate most of the Internet simply won't work.

Four, the benefits must outweigh the costs. Medical quarantines are expensive to maintain, especially if people are being quarantined against their will. Determining who to quarantine is either expensive (if it's done correctly) or arbitrary, authoritative and abuse-prone (if it's done badly). It could even be both. The value to society must be worth it.

It's the last point that Charney and others emphasize. If Internet worms were only damaging to the infected, we wouldn't need a societally imposed quarantine like this. But they're damaging to everyone else on the Internet, spreading and infecting others. At the same time, we can implement systems that quarantine cheaply. The value to society far outweighs the cost.

That makes sense, but once you move quarantines from isolated private networks to the general Internet, the nature of the threat changes. Imagine an intelligent and malicious infectious disease: That's what malware is. The current crop of malware ignores quarantines; quarantines are few and far enough between that they don't affect the malware's effectiveness.

If we tried to implement Internet-wide--or even countrywide--quarantining, worm-writers would start building in ways to break the quarantine. So instead of nontechnical users not bothering to break quarantines because they don't know how, we'd have technically sophisticated virus-writers trying to break quarantines. Implementing the quarantine at the ISP level would help, and if the ISP monitored computer behavior, not just specific virus signatures, it would be somewhat effective even in the face of evasion tactics. But evasion would be possible, and we'd be stuck in another computer security arms race. This isn't a reason to dismiss the proposal outright, but it is something we need to think about when weighing its potential effectiveness.

Additionally, there's the problem of who gets to decide which computers to quarantine. It's easy on a corporate or university network: the owners of the network get to decide. But the Internet doesn't have that sort of hierarchical control, and denying people access without due process is fraught with danger. What are the appeal mechanisms? The audit mechanisms? Charney proposes that ISPs administer the quarantines, but there would have to be some central authority that decided what degree of infection would be sufficient to impose the quarantine. Although this is being presented as a wholly technical solution, it's these social and political ramifications that are the most difficult to determine and the easiest to abuse.

Once we implement a mechanism for quarantining infected computers, we create the possibility of quarantining them in all sorts of other circumstances. Should we quarantine computers that don't have their patches up to date, even if they're uninfected? Might there be a legitimate reason for someone to avoid patching his computer? Should the government be able to quarantine someone for something he said in a chat room, or a series of search queries he made? I'm sure we don't think it should, but what if that chat and those queries revolved around terrorism? Where's the line?

Microsoft would certainly like to quarantine any computers it feels are not running legal copies of its operating system or applications software. The music and movie industry will want to quarantine anyone it decides is downloading or sharing pirated media files--they're already pushing similar proposals.

A security measure designed to keep malicious worms from spreading over the Internet can quickly become an enforcement tool for corporate business models. Charney addresses the need to limit this kind of function creep, but I don't think it will be easy to prevent; it's an enforcement mechanism just begging to be used.

Once you start thinking about implementation of quarantine, all sorts of other social issues emerge. What do we do about people who need the Internet? Maybe VoIP is their only phone service. Maybe they have an Internet-enabled medical device. Maybe their business requires the Internet to run. The effects of quarantining these people would be considerable, even potentially life-threatening. Again, where's the line?

What do we do if people feel they are quarantined unjustly? Or if they are using nonstandard software unfamiliar to the ISP? Is there an appeals process? Who administers it? Surely not a for-profit company.

Public health is the right way to look at this problem. This conversation--between the rights of the individual and the rights of society--is a valid one to have, and this solution is a good possibility to consider.

There are some applicable parallels. We require drivers to be licensed and cars to be inspected not because we worry about the danger of unlicensed drivers and uninspected cars to themselves, but because we worry about their danger to other drivers and pedestrians. The small number of parents who don't vaccinate their kids have already caused minor outbreaks of whooping cough and measles among the greater population. We all suffer when someone on the Internet allows his computer to get infected. How we balance that with individuals' rights to maintain their own computers as they see fit is a discussion we need to start having.

Anatomy Of An Attempted Malware Scam

I stumbled across this fascinating inside account of how cyber criminals infiltrate online advertising by Julia Casale-Amorim of Casale Media. Try not to get lost in the technical jargon of the advertising world and instead focus on the criminals' cleverness and level of effort.

The display media segment is the newest target of malvertising, the latest trend in online criminal methodology. The problem has escalated in recent months and despite many suppliers' best efforts, it continues to grow. The culprits behind many of these attacks are based in foreign states, leaving little recourse for action. While the best defense against malvertising is to prevent it from happening in the first place, this has proven to be a challenge for even the most astute publishers, networks and the like.

We were recently the targets of one such attempt, and while it certainly wasn't the first "fake agency" we've been besieged by (and that we've successfully stopped), it is one of the most organized efforts we've encountered so far. Below we've outlined the approach that was used and the findings of our investigation as an FYI to others who may be on the target list.

If there's anything we've learned since the practice of malvertising has surfaced (and has since proliferated), it's that you can't be too detailed with your client background checks and creative reviews. We've always been big on our screening procedures, and these days it's proving to be an increasingly valuable practice. Malvertising reflects negatively on the entire online media industry and the onus has to fall on us (suppliers) to put a stop to it. So, we want to share our learnings here for the greater community to hopefully benefit from.

Here is a breakdown of the approach used by the individuals behind our most recent malware experience, how we caught them, and the findings of our subsequent investigation. We've also highlighted some pink flags (and the ultimate red flag) that came up along the way, as well as our key takeaways from the experience, including some of the steps we now have in place (and which you may want to consider implementing) to help us identify similar perpetrators sooner rather than later.

Initial contact, proposal and campaign review

The culprits approached us in early July, representing themselves as an agency looking to place a campaign for both a big name charity and a travel client. (We are omitting names to protect their brands from being associated with this scam; we have no reason to believe they were involved.) Following our proposal phase, "Bellas" informed us that the big name charity was still "undergoing approval phase", but that their travel client had approved a test on our network and wanted to proceed.

(Pink flag: while not completely implausible, it is rare for an unknown agency to bring one or more large brands to the table, let alone doing so without first undergoing a formal RFI/RFP process.)

Despite the pink flag, we proceeded, and because we had never worked with this agency before, we began by processing their request for credit. Each of the references provided had professionally produced websites and unique phone numbers -- nothing at the surface level that would raise any suspicion. The bank reference was real (a real bank, that is) and the phone number provided worked. The information we requested was supplied to us in an official, expected manner. Nothing out of the ordinary here.

All three references we contacted provided prompt and friendly responses and each reported that they had been doing business with Bellas for anywhere between six months and two years at fairly respectable sums.

For added assurance, the "fake agency" supplied us with a PDF which was represented as an official document of incorporation.

With no glaring reason to deny, we approved their application for limited starter credit and proceeded to the next step, campaign setup.

Campaign Setup and QA

The campaign's goals were a little unusual for what we would typically consider to be a direct response advertiser:

We are really focused on reach and unique viewers optimizations. Thus tight frequency cap like 1/24 or 1/48 can work. CTR is secondary goal at this point. A lot of people don't know much about client services and we want to cover every single possible customer.

We logged their goals and rationale. We also noted them as a pink flag. The proprietors of these scams typically focus more on unique reach and frequency than on targeting, audience or optimization - a focus that, in general terms, is most unusual for the average online advertiser. Of course, in hindsight, their interest in unique reach stemmed from their desire to infect across the widest possible net.

On our initial request for creative, "Bellas" provided us with a set of third-party tags, which were rejected because they were not from one of our certified ad serving vendors.

We were then provided with raw creative files. While the creatives were clean (i.e. no malicious code), there were some minor design flaws, including missing borders and file sizes that exceeded our standard maximums. We informed them of these issues and they responded:

We are currently run[ning] with AOL and Yahoo (including comscore 1-150 pubs) and they are cool.

Hum, really? AOL and Yahoo have some of the strictest ad specs around...(pink flag).

After some lengthy back and forth about the creative revisions...

We are not able to reduce creative size without sacrificing quality. If you cannot run creative size more than 20kb -- we can host. If not -- we wont be able to proceed with campaign.

"Bellas," at that point, requested that we run an impression tracking URL. The "OpenX" URL provided to us was flagged during our QA review, another pink flag; the formatting and characters were not consistent with the standard employed by OpenX. We informed Bellas that to use the URL we would need to perform a few modifications to make it consistent with the standard. We provided an example of the modified URL and then received the following responses:

I have contacted OpenX support to find out. Meanwhile I got another pixel for you. We have used it with our hosted campaigns and it worked wonders.

Client prefers Eyeblaster tracking URL (their ad server). Would be cool if you can implement. If not -- OpenX is perfectly fine.

Next, Bellas informed us of the "response" they received from "OpenX support" and then supplied us with a new pixel to use.

"Hi Henry. Looks like Casale runs , which is NOSCRIPT part of the code, instead of JS pixel (script part), that affects reporting a bit and you cannot add any additional tracking code." Are you able to implement JS OpenX pixel or Eyeblaster pixel directly? Alternatively, we can provide tags.

After informing "Bellas" that we would forward the new pixel to our traffic team for evaluation, we received the following response...

Client have sent another pixel, from zedo.

Pink flag. So now we have a client who wanted to serve through OpenX, then Eyeblaster, and now Zedo? Really? We reviewed the Zedo tracking URL and asked for confirmation about a few details since it did not conform to the ad server's standard. They replied,

For JS pixel to work properly, you need to load is exactly like that ... Will work.

Red flag! The set of tags provided were imitation tags. We ended discussions with the client at this point since things were just not adding up, and launched a detailed investigation to confirm our suspicions.

During our investigation we discovered the phone number provided in the credit application was not a legit phone number for the bank. We also learned that the domains of each of the references provided were registered within two days of each other... and that the registrations took place only days before Bellas Interactive's request for credit was issued - despite the fact that the references "claimed" to be working with Bellas across a 6-24 month spread. And finally, the Bellas Interactive website claimed to be in operation since 1994, despite the fact that the domain was registered in April of this year.

In Summary

Entities like this are cunning and smart. Their scams are well thought through and executed. The best defence against them is rigorous proactive screening. You have to be really, really astute. Question everything. These guys know the industry lingo and procedures, and have created a false environment designed specifically to validate their non-existence. Even the most insignificant detail can be a huge clue.

Our Lessons Learned and Advice for Others

Perform independent fact checking.

Don't take the information provided to you on bank/credit reference applications at face value. Perform a few spot checks to validate the sources. If, when we looked up the bank reference, we had cross referenced the phone number provided by Bellas with the numbers listed on the bank's website, we would have exposed a major crack in their armour upfront, which would have saved us a lot of wasted time and effort.
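The cross-check described above is easy to automate once the official numbers have been copied from the bank's own website. The sketch below is one possible approach under simple assumptions (US-style numbers, hypothetical helper names `normalize_phone` and `phone_is_listed`); the sample numbers in the usage note are fictional.

```python
import re

def normalize_phone(number):
    """Reduce a phone number to its digits, dropping a leading US '1'."""
    digits = re.sub(r"\D", "", number)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits

def phone_is_listed(supplied, official_numbers):
    """True if the supplied number matches one the institution publishes."""
    official = {normalize_phone(n) for n in official_numbers}
    return normalize_phone(supplied) in official
```

For example, with official numbers `["1-800-555-0100", "(212) 555-0123"]`, a supplied `212.555.0123` matches, while `(646) 555-0199` does not and deserves a follow-up call to a number from the bank's site.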

Research. Then research some more.

Make it SOP to do research on not only the agency in question, but the credit references provided to you. Search for them online, do a WHOIS lookup on the domains, ask around. Make certain that everything adds up. You can't be too cautious.
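Once the WHOIS lookups are done, the comparison itself can be sketched as below. The function performs no WHOIS query; registration dates are entered by hand from the lookup results. The dictionary keys, the `reference_red_flags` name, the 30-day month approximation and the sample dates are all assumptions for illustration, not details from the actual investigation.

```python
from datetime import date

def reference_red_flags(references, application_date, cluster_days=2):
    """Flag credit references whose domain history contradicts their claims.

    `references` is a list of dicts with hypothetical keys:
    name, domain_registered (date), claimed_months (int).
    """
    flags = []
    for ref in references:
        age_days = (application_date - ref["domain_registered"]).days
        # Approximate a month as 30 days; precision is not needed here.
        if age_days < ref["claimed_months"] * 30:
            flags.append(f"{ref['name']}: domain younger than claimed relationship")
    dates = sorted(ref["domain_registered"] for ref in references)
    if len(dates) > 1 and (dates[-1] - dates[0]).days <= cluster_days:
        flags.append("all reference domains registered within days of each other")
    return flags

# Invented sample data, loosely shaped like the pattern described in the post.
sample_refs = [
    {"name": "Reference A", "domain_registered": date(2010, 6, 28), "claimed_months": 24},
    {"name": "Reference B", "domain_registered": date(2010, 6, 29), "claimed_months": 12},
    {"name": "Reference C", "domain_registered": date(2010, 6, 30), "claimed_months": 6},
]
sample_flags = reference_red_flags(sample_refs, date(2010, 7, 5))
```

With this sample, every reference is flagged as younger than its claimed relationship, and the cluster check adds a fourth flag because all three domains were registered within two days.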

When the going gets tough...

If a client is difficult to work with, there's probably a reason for it. Standards exist for a reason. Any account that is operating outside the norms should register as an immediate red flag to you. Issues surrounding pixels, creative design, obsession over going live too quickly with no sound rationale or justification...any of these examples should set alarm bells off in your head!

Be suspicious.

Perception is selective. It's natural for small details to escape us when we're not on guard or actively looking for something. It's also easy to get overly comfortable with the mechanics of a standard procedure. If you approach every new account with suspicion, you'll be far more aware of any detail that may seem out of place.

Don't assume. Question and verify.

Certify third party ad servers that you are willing to deliver through, and keep clear lines of communication open with them at all times. Store tag templates and use them in your QA/review process. If a tag deviates from the standard template that you typically see from a third party ad server, escalate to them for an opinion. Never assume that the template has changed; always question it.
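A stored-template check of this kind could look roughly like the sketch below. The host name, URL pattern and `review_tag` helper are hypothetical; real templates would come from the certified ad server vendors, and a real review would cover full tag markup rather than a single URL.

```python
import re
from urllib.parse import urlparse

# Hypothetical templates: one regular expression per certified ad server.
TAG_TEMPLATES = {
    "adserver-a": re.compile(r"^/track/imp\?campaign=\d+&placement=\d+$"),
}
# Hypothetical mapping from certified serving hosts to vendors.
CERTIFIED_HOSTS = {"tags.adserver-a.example": "adserver-a"}

def review_tag(url):
    """Return 'ok', or a reason the tag should be escalated to the vendor."""
    parts = urlparse(url)
    vendor = CERTIFIED_HOSTS.get(parts.hostname)
    if vendor is None:
        return "escalate: host is not a certified ad server"
    path_and_query = parts.path + ("?" + parts.query if parts.query else "")
    if not TAG_TEMPLATES[vendor].match(path_and_query):
        return "escalate: tag deviates from stored template"
    return "ok"
```

The host lookup is an exact match on purpose, so a lookalike such as `tags.adserver-a.example.evil.example` is escalated rather than waved through.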

Re-examine critical points in your new account process.

When an account is new, consider minimizing the involvement of your sales staff in the review and verification process. In some cases, a sales person's thirst for new revenue can hamper their nose for suspicious behavior.

Sunday, November 14, 2010

USAA Credential Phishing

Security company M86 blogs about a sophisticated phishing attack targeting members of the USAA. Would you have spotted this attack?

Today we started seeing a new phishing campaign which is being sent by the Cutwail spambot, targeting customers of the United Services Automobile Association (USAA). Cutwail is the spamming component installed by the Pushdo botnet. The phishing emails ask the recipient to fill out a ‘confirmation form’ which they can access by clicking on a link in the message.

To hide the URL of the phishing web page, these emails contain a link to one of several different URL shortening services such as http://bit.ly which redirect the browser to the actual phishing page.

The link ‘Access USAA Confirmation Form’ in the spam email above points to http://bit . ly/agWGNG. When we tested this link, bit.ly had already determined that there may be a problem with the URL it was redirecting to and displayed a warning page rather than redirecting us to the phishing page.

If we choose to ignore this warning and continue to the un-shortened URL, we end up at the page below, a phishing website aimed at stealing information from USAA members. This page, titled ‘Cardholder Form’, asks the user to provide information such as their online ID, password, name, card number, card security code and PIN. When the user clicks the submit button all of the details are sent to the criminals’ server and the users’ browser is redirected to the real USAA website.

For now, this phishing site, which is hosted on the domain vsdfile (dot) ru, is not serving up any malicious content. The USAA provides a banking and credit card service which may be the intended target of these criminals once they have tricked a customer into divulging their cardholder details.
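One simple defence against this kind of redirect is to compare the host of the final, un-shortened URL with the domain the message claims to come from. The sketch below assumes the short link has already been expanded by some other means (it makes no network requests), and `host_belongs_to` is a hypothetical helper name; the URL paths in the usage note are made up.

```python
from urllib.parse import urlparse

def host_belongs_to(url, expected_domain):
    """True if the URL's host is the expected domain or a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    expected = expected_domain.lower()
    # Require an exact match or a dot boundary, so that a host such as
    # "usaa.com.vsdfile.ru" is not mistaken for "usaa.com".
    return host == expected or host.endswith("." + expected)
```

Checked against `usaa.com`, a link to `https://www.usaa.com/` passes, while `http://vsdfile.ru/form` and the lookalike `http://usaa.com.vsdfile.ru/` both fail.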

We have not seen one of these large scale phishing campaigns from Cutwail for some time, as the cybercriminals switched to spamming out links to the data-stealing Zeus malware. With the recent high profile arrests of several Zeus perpetrators, and all the subsequent public attention on Zeus, maybe phishing, where you politely ask for data instead of stealing it, will come back in fashion?

Pentagon is debating cyber-attacks

Fascinating article by the Washington Post's Ellen Nakashima detailing the policy debate surrounding the use of offensive cyber warfare. Some interesting excerpts from the article include ...

Read the full article here.

Cyber Command's chief, Gen. Keith B. Alexander, who also heads the National Security Agency, wants sufficient maneuvering room for his new command to mount what he has called "the full spectrum" of operations in cyberspace.

Offensive actions could include shutting down part of an opponent's computer network to preempt a cyber-attack against a U.S. target or changing a line of code in an adversary's computer to render malicious software harmless. They are operations that destroy, disrupt or degrade targeted computers or networks.

But current and former officials say that senior policymakers and administration lawyers want to limit the military's offensive computer operations to war zones such as Afghanistan, in part because the CIA argues that covert operations outside the battle zone are its responsibility and the State Department is concerned about diplomatic backlash.

The administration debate is part of a larger effort to craft a coherent strategy to guide the government in defending the United States against attacks on computer and information systems that officials say could damage power grids, corrupt financial transactions or disable an Internet provider.

The effort is fraught because of the unpredictability of some cyber-operations. An action against a target in one country could unintentionally disrupt servers in another, as happened when a cyber-warfare unit under Alexander's command disabled a jihadist Web site in 2008. Policymakers are also struggling to delineate Cyber Command's role in defending critical domestic networks in a way that does not violate Americans' privacy.

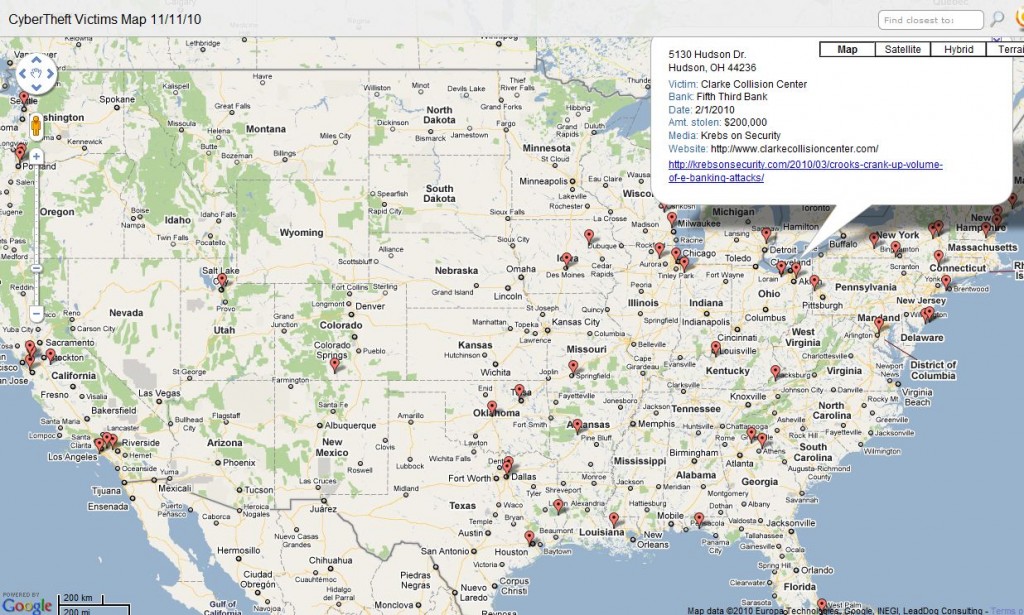

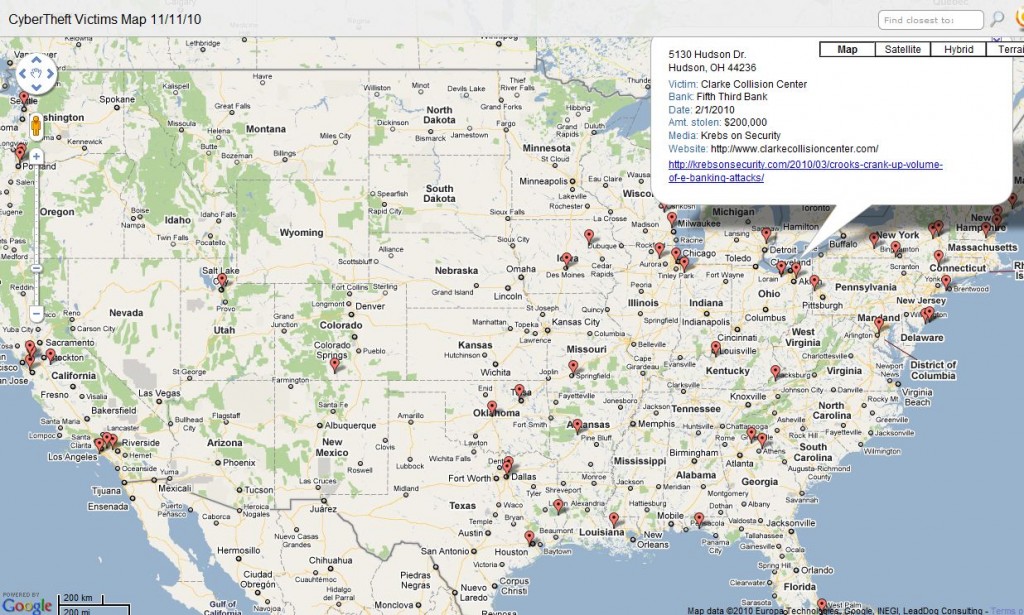

Mapping Attacks Against Online Banks

From Krebsonsecurity.com ...

Several readers have asked to be notified if the U.S. map showing recent victims of high-dollar online banking thefts was updated. Below is a (non-interactive) screen shot of the updated, interactive map that lives here. Click the red markers to see more detail about the victim at that location, including a link to a story about the attack.

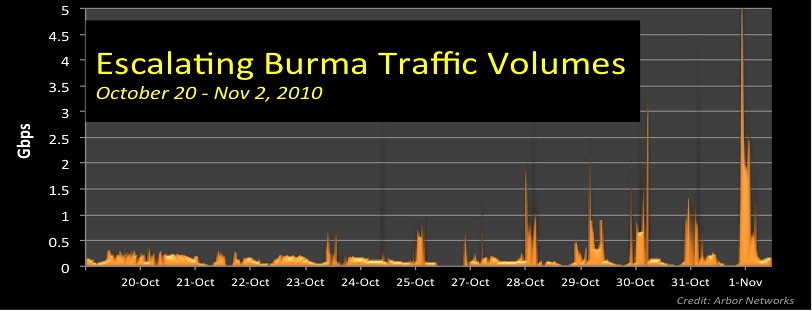

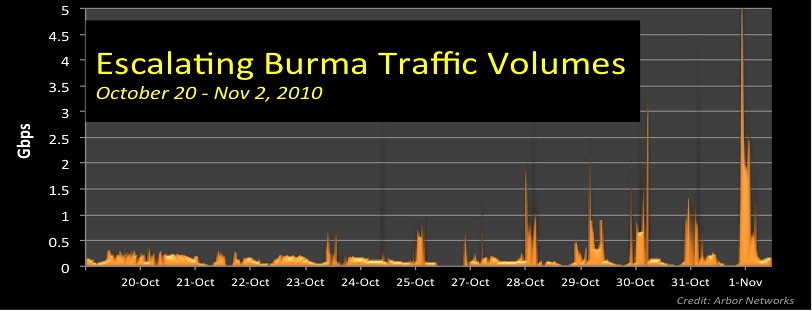

Attack Severs Burma Internet

From Arbor Networks ...

Back in 2007, the Burmese government reportedly severed the country’s Internet links in a crackdown over growing political unrest.

Yesterday, Burma once again fell off the Internet. Over the last several days, a rapidly escalating, large-scale DDoS has targeted Burma’s main Internet provider, the Ministry of Post and Telecommunication (MPT), disrupting most network traffic in and out of the country.

While the motivation for the attack is unknown, Twitter and blogs have been awash in speculation ranging from blaming the Burma / Myanmar government (preemptively disrupting Internet connectivity ahead of the November 7 general elections) to external attackers with still-mysterious motives. The Myanmar Times reports the attack has been ongoing since October 25th (and adds the attack may impact Burma’s tourist industry).

We estimate the Burma DDoS at 10-15 Gbps (several hundred times more than enough to overwhelm the country’s 45 Mbps T3 terrestrial and satellite links). The DDoS includes dozens of individual attack components (e.g. TCP SYN and RST floods) against multiple IP addresses within MPT’s address blocks (203.81.64.0/19, 203.81.72.0/24, 203.81.81.0/24 and 203.81.82.0/24). The attack also appears fairly well-distributed: ATLAS data shows attack traffic across 20 or more providers with a broad range of source addresses.
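The figures in this paragraph can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above and assumes 1 Gbps = 1000 Mbps; the variable names are arbitrary.

```python
import ipaddress

LINK_MBPS = 45            # T3 capacity cited in the post
ATTACK_GBPS = (10, 15)    # estimated attack range cited in the post

# Ratio of attack traffic to link capacity at each end of the estimate.
ratios = [round(g * 1000 / LINK_MBPS) for g in ATTACK_GBPS]

blocks = [ipaddress.ip_network(b) for b in
          ("203.81.64.0/19", "203.81.72.0/24", "203.81.81.0/24", "203.81.82.0/24")]
supernet = blocks[0]
# Which of the listed /24 blocks already fall inside the /19?
nested = [str(b) for b in blocks[1:] if b.subnet_of(supernet)]
```

Under these figures the attack is roughly 220 to 330 times the link capacity, consistent with "several hundred times", and all three /24 blocks sit inside the /19, which spans 203.81.64.0 through 203.81.95.255.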

While DDoS attacks against e-commerce and commercial sites are common (hundreds per day), large-scale geo-politically motivated attacks, especially ones targeting an entire country, remain rare with a few notable exceptions. At 10-15 Gbps, the Burma attack is also significantly larger than the 2008 Georgia (814 Mbps) and 2007 Estonia DDoS attacks. Early this year, Burmese dissident web sites (hosted outside the country) also came under DDoS attacks.

At present I do not know the motives for this attack, but our past DDoS analyses have observed the full gamut, from politically motivated DDoS and government censorship to extortion and stock manipulation. I’ll update this blog if I get more details.

US internet hosts are linchpin of criminal botnets

From the New Scientist ...

WHILE criminal gangs in Russia and China are responsible for much of the world's cybercrime, many of the servers vital to their activities are located elsewhere. An investigation commissioned by New Scientist has highlighted how facilities provided by internet companies in the US and Europe are crucial to these gangs' activities.

Researchers at Team Cymru, a non-profit internet security company based in Burr Ridge, Illinois, delved into the world of botnets - networks of computers that are infected with malicious software. Millions of machines can be infected, and their owners are rarely aware that their computers have been compromised or are being used to send spam or steal passwords.

Several botnets have been linked to gangs based in Russia, where police have a poor record on tackling the problem. But to manage their botnets these gangs often seem to prefer to use computers, known as command-and-control (C&C) servers, in western countries. More than 40 per cent of the 1500 or so web-based C&C servers Team Cymru has tracked this year were in the US. When it comes to hosting C&C servers, "the US is significantly ahead of anyone else", says Steve Santorelli, Team Cymru's director of global outreach in San Diego.

Santorelli and his colleagues also detected a daily average of 226 C&C servers in China and 92 in Russia. But European countries not usually linked with cybercrime were in a similar range, with an average of 120 C&C servers based in Germany and 64 in the Netherlands.

Internet hosts in western countries appeal to criminals for the same reasons that regular computer users like them, says Santorelli: the machines are extremely reliable and enjoy high-bandwidth connections. Team Cymru's research did not identify which companies are hosting botnet servers, but Santorelli says the list would include well-known service providers.

The use of US-based C&C servers to control botnets is a source of frustration to security specialists, who have long been aware of the problem. It is happening even though most hosting companies shut down C&C servers as soon as they receive details of botnet activity from law enforcement agencies and security firms. "When we see an AT&T address serving as a botnet control point, we take it very seriously," says Michael Singer, an executive director at AT&T.

Despite these efforts, the criminals can quickly re-establish control by setting up a new C&C server with a different company, often using falsified registration information and stolen credit card details.

Hosting companies deal with botnets on a voluntary basis at present. They might be more vigilant if required to act by law, but that would create its own regulatory problems, Santorelli says. "The cops don't run or govern the internet after all, and neither do they want to," he says. For legal controls to work, it would be necessary to define who has the authority to decide whether a server is part of a botnet, and how requests from authorities abroad are dealt with.

Jeffrey Carr of security firm Taia Global, based in Washington DC, says that some less well-known providers have been warned about botnet activity on many occasions, but drag their heels when asked to shut down the criminals' servers.

The problem arises partly because web hosting can be a big earner for some firms. "They're generating millions of dollars in income," says Carr. Improvements in security, such as requiring service providers to verify the details of people who rent server facilities, could well hurt these firms' bottom line.

Nobel Peace Prize, Amnesty HK and Malware

From Nart Villeneuve at SecDev.cyber ...

There have been two recent attacks involving human rights and malware. First, on November 7, 2010, contagiodump.blogspot.com posted an analysis of a malware attack that masqueraded as an invitation to attend an event put on by the Oslo Freedom Forum for Nobel Peace Prize winner Liu Xiaobo. The malware exploited a known vulnerability (CVE-2010-2883) in Adobe Reader/Acrobat. The Committee to Protect Journalists was hit by the same attack.

On November 10, 2010, Websense reported that the website of Amnesty Hong Kong was compromised and was delivering an Internet Explorer 0day exploit (CVE-2010-3962) to visitors. In addition, Websense reports that the same malicious server was serving three additional exploits: a Flash exploit (CVE-2010-2884), a QuickTime exploit (CVE-2010-1799) and a Shockwave exploit (CVE-2010-3653).

The malicious domain name hosting the exploits, mailexp.org (74.82.168.10), has been serving malware since Sept. 2010. The domain mailexp.org was registered in May 2010 to y_yum22@yahoo.com. mailexp.org was formerly hosted on 74.82.172.221, which now hosts the Zhejiang University Alumni Association website.

The malware dropped from the Internet Explorer exploit (CVE-2010-3962)

scvhost.txt

MD5: ca80564d93fbe6327ba6b094ae3c0445 VT: 2/43

The malware dropped from the Flash exploit (CVE-2010-2884)

hha.exe

MD5: 0da04df8166e2c492e444e88ab052e9c VT: 2/43

The malware dropped from the QuickTime exploit (CVE-2010-1799)

qq.exe

MD5: 3e54f1d3d56d3dbbfe6554547a99e97e VT: 16/43

The malware dropped from the Shockwave exploit (CVE-2010-3653)

pdf.exe

MD5: 3a459ff98f070828059e415047e8d58c VT: 0/43

Both ca80564d93fbe6327ba6b094ae3c0445 and 3a459ff98f070828059e415047e8d58c perform a DNS lookup for ns.dns3-domain.com, which is an alias for centralserver.gicp.net which resolves to 221.218.165.24 (China Unicom Beijing province network).

The domain name “ns.dns3-domain.com” has been associated with a variety of malware going back to May 2010. Its parent domain, dns3-domain.com, is registered to zhanglei@netthief.net, the developer of the NetThief RAT.
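The MD5 values listed above can be used as simple indicators when triaging files. The sketch below is only an illustration: the table is copied from the hashes in this post, while the helper names (`md5_hex`, `lookup_digest`, `check_file`) are hypothetical and not part of any vendor tool. An MD5 match flags a known sample; the absence of a match says nothing about whether a file is safe.

```python
import hashlib
from typing import Optional

# MD5 digests and dropped file names as listed in the post above.
KNOWN_MD5 = {
    "ca80564d93fbe6327ba6b094ae3c0445": "scvhost.txt",
    "0da04df8166e2c492e444e88ab052e9c": "hha.exe",
    "3e54f1d3d56d3dbbfe6554547a99e97e": "qq.exe",
    "3a459ff98f070828059e415047e8d58c": "pdf.exe",
}


def md5_hex(data: bytes) -> str:
    """Return the lowercase hex MD5 digest of a byte string."""
    return hashlib.md5(data).hexdigest()


def lookup_digest(digest: str) -> Optional[str]:
    """Return the listed file name for a digest, or None if it is not listed."""
    return KNOWN_MD5.get(digest.strip().lower())


def check_file(path: str) -> Optional[str]:
    """Hash a file in chunks and look the result up in the indicator table."""
    hasher = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            hasher.update(chunk)
    return lookup_digest(hasher.hexdigest())
```

For example, `check_file("suspect.bin")` (a hypothetical path) returns the listed sample name on a match and `None` otherwise.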

Malware attacks leveraging human rights issues are not new. I have been documenting them for some time (see Human Rights and Malware Attacks, Targeted Malware Attack on Foreign Correspondent’s based in China, “0day”: Civil Society and Cyber Security). However, one of the issues that Greg Walton and I raised last year is a trend toward compromising the real web sites of human rights organizations and using them as vehicles to deliver 0day exploits to visitors, many of whom may be staff and supporters of the specific organization. Unfortunately, we can expect this to continue.

Monday, November 8, 2010

Google Hacking SCADA

Interesting Tweet from Ruben Santamarta at reversemode.com.

It's not a good idea to expose a SCADA Control Center of Wind Turbines in a public subdomain http://is.gd/gNLts

Basically, Ruben found the login page to an Industrial Control System ... ouch!

You can follow Ruben on Twitter here @reversemode

Metasploit and SCADA exploits: dawn of a new era?

Courtesy Shawn Merdinger

On 18 October, 2010 a significant event occurred concerning threats to SCADA (supervisory control and data acquisition) environments.

That event is the addition of a zero-day exploit for the RealFlex RealWin SCADA software product into the Metasploit repository. Here are some striking facts about this event:

- This was a zero-day vulnerability that unfortunately was not reported publicly, to an organization like ICS-CERT or CERT/CC, or (afaik) to the RealFlex vendor.

- This exploit was not added to the public Exploit-DB site until 27 October, 2010.

- The existence of this exploit was not acknowledged with an ICS-CERT advisory until 1 November, 2010.

- This is the first SCADA exploit added to Metasploit.

So what are the lessons learned and takeaways from this seminal event?

First, the SCADA community can expect to see an explosion of vulnerabilities and accompanying exploits against SCADA devices in the near future.

Personally, I expect we will see in the next 12 months at least a doubling of the known 16 SCADA vulnerabilities documented in NIST’s National Vulnerability Database.

Second, the diverse information sources in which SCADA vulnerabilities may appear must be vigilantly monitored by numerous organizations and security researchers.

Afaik, the first widely disseminated information on the RealFlex RealWin buffer overflow appeared on 1 November, when I sent the information to the SCADASEC mailing list.

Third, people should recognize that the recent Stuxnet threat has cast a light on SCADA security issues. Put bluntly, there is blood in the water.

Quite a few people, companies and other organizations are currently investigating SCADA product security, buying equipment and conducting security testing for a number of differing interests and objectives.

I expect SCADA security issues will be the shiny hot topic on the 2011 security and hacker conference circuit, both in the US and abroad.

Fourth, understand that because of the current broken business model, security researchers are often frustrated by software vendors’ action, or inaction, when vulnerabilities are reported to them.

Often, there is no security point-of-contact at the vendor. Even worse, the technical support who are contacted by the security researcher often do not understand the technical and security implications of the issue reported.

And it is worth mentioning that a vendor acknowledging a product security issue is then “on the hook”, so there is an incentive for the vendor to dismiss the vulnerability report.

Even in the case of specialty SCADA security shops reporting vulnerabilities to the vendor, we are seeing documented cases of “vendor spin” furthering the bad blood between vendors and ethical researchers.

All of these factors lead to frustrated security researchers, some of whom will simply expose the vulnerability and exploit to the world, rather than take a disclosure path through a CERT.

Fifth, folks should recognize that attack frameworks like Metasploit enable a never-before-seen level of integration of these kinds of targeted critical infrastructure-related exploits into a powerful tool.

For a kinetic metaphor, Metasploit is akin to a .50 caliber sniper rifle, and a zero-day SCADA vulnerability is equivalent to a .50 caliber depleted uranium round for that rifle.

As a SCADA end user, what are you to do?

I recommend the following, at a minimum: push your vendors to have a product security POC and process, monitor resources like SCADASEC, keep current with tools like Metasploit, and receive vulnerability notifications from appropriate CERT organizations like ICS-CERT.
